vSAN host fails to enter Maintenance Mode with 'Full data migration' option selected

Article ID: 326857

Updated On:

Products

VMware vSAN

Issue/Introduction

Symptoms:

- A host will fail to enter maintenance mode using Full Data Evacuation if the cluster cannot meet the minimum number of fault domains that the storage policy requires for the evacuation, which is N+1 (one more than the N fault domains the policy needs for object placement).

- Unable to decommission a node from a 3-node vSAN cluster.

- Placing the host in maintenance mode with Full Data Migration fails with the error:

"1 more standalone host is required. Some objects will become inaccessible or non-compliant with their storage policy"

Awareness of vSAN cluster requirements.

The task using Full Data Evacuation will also hang and/or fail when there is insufficient space to migrate all of the data to other hosts.

Environment

VMware vSAN 8.x

VMware vSAN 7.x

VMware vSAN 6.x

Cause

vSAN requires a certain number of hosts to be active with disk groups contributing capacity and resources in vSAN in order to provide fault tolerance. If the requirements cannot be met, vSAN will fail the pre-check it performs when placing a host into maintenance mode.

See the below documentation for further information:

Managing Fault Domains in Virtual SAN Clusters

Fault Domains

When a host enters maintenance mode, a "What-if" scenario is run. If the result shows that not enough fault domains will be available after the host enters maintenance mode, the Full Data Migration will fail.

The clomd.log will provide the related error message:

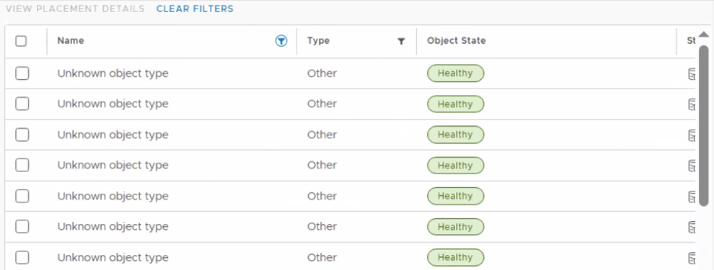

Even if the storage policy on the VMs has been changed to match the fault domains available after placing a host in maintenance mode with "Full Data Migration", there may be unknown, unassociated, or inaccessible vSAN objects whose storage policy remains the same, and these cause the maintenance mode task to fail.

This error can also be seen if any virtual disk of any VM is configured with an improper storage policy. If the object placement requirements cannot be fulfilled after the host is put in maintenance mode, this error is expected.

Example clomd.log entries:

LOM_CheckClusterResourcesForPolicy: Not enough Upper FD's available. Available: 3, needed: 4

LOM_CheckClusterResourcesForPolicy: Not enough Upper FD's available. Available: 4, needed: 5

When the host cannot enter maintenance mode with Full Data Evacuation because of insufficient space, the "What-if" scenario will also indicate that the task will fail.
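When reviewing clomd.log, the fault domain shortfall can be read directly from lines of the form quoted in this article. The following is a minimal Python sketch, not a supported tool: the function name `parse_fd_shortfall` is hypothetical, and the pattern only assumes the message text shown in this article.

```python
import re

# Pattern based only on the clomd.log message quoted in this article;
# other message formats are not handled.
FD_ERROR = re.compile(
    r"Not enough Upper FD's available\. Available: (\d+), needed: (\d+)"
)


def parse_fd_shortfall(line):
    """Return (available, needed) fault domains, or None if the line does not match."""
    match = FD_ERROR.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


sample = (
    "LOM_CheckClusterResourcesForPolicy: Not enough Upper FD's available. "
    "Available: 3, needed: 4"
)
available, needed = parse_fd_shortfall(sample)
print(f"Missing fault domains: {needed - available}")
```

A difference of 1 between "needed" and "Available" corresponds to the "1 more standalone host is required" error shown in the symptoms.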

Resolution

For fault domain related failure:

If full data migration is needed, an additional host must be added to the vSAN cluster first: a 4th host for RAID1, a 5th host for RAID5, or a 7th host for RAID6.

* Otherwise the Storage Policy can be changed to FTT=1,FTM=RAID1
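The host counts above can be expressed as a small lookup. This is a minimal Python sketch for planning purposes only: the names `HOSTS_FOR_FULL_MIGRATION` and `hosts_to_add` are hypothetical, and the table contains only the three values stated in this article.

```python
# Hosts required in the cluster before a Full Data Migration can succeed,
# taken from the resolution text (4th host for RAID1, 5th for RAID5,
# 7th for RAID6). Other policies are intentionally not covered.
HOSTS_FOR_FULL_MIGRATION = {"RAID1": 4, "RAID5": 5, "RAID6": 7}


def hosts_to_add(policy, current_hosts):
    """Return how many hosts must be added before a full data migration."""
    required = HOSTS_FOR_FULL_MIGRATION[policy]
    return max(0, required - current_hosts)


# The 3-node RAID1 cluster described in the symptoms needs one more host.
print(hosts_to_add("RAID1", 3))
```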

If any virtual disk is configured with an improper storage policy, correct the policy on that disk to fix the issue.

For unknown and unassociated vSAN objects:

Changing the storage policy for these objects is not available in vSphere Client.

If this is the case, please raise a case with Broadcom Technical Support.

Please be aware that during the change from RAID5 or RAID6 to RAID1, or from RAID6 to RAID5, new components will be created first and the old ones deleted only once the rebuild has completed. Make sure that there is sufficient space in the cluster before proceeding.
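Because old and new components coexist until the rebuild completes, a rough upper estimate of the raw capacity consumed during a policy change is the sum of both layouts. The following Python sketch illustrates the arithmetic only. The capacity multipliers (2x for RAID1 with FTT=1, about 1.33x for RAID5, 1.5x for RAID6) are commonly cited figures and are an assumption here, not values from this article; the sketch ignores witness components, deduplication, compression, and thin provisioning, and the function name `peak_raw_usage_gb` is hypothetical.

```python
# Assumed raw-capacity multipliers per policy (not stated in this article).
CAPACITY_MULTIPLIER = {
    "RAID1": 2.0,      # FTT=1 mirroring: two full copies
    "RAID5": 4.0 / 3,  # 3 data + 1 parity
    "RAID6": 1.5,      # 4 data + 2 parity
}


def peak_raw_usage_gb(data_gb, old_policy, new_policy):
    """Estimate raw usage while old and new components exist side by side."""
    old_usage = data_gb * CAPACITY_MULTIPLIER[old_policy]
    new_usage = data_gb * CAPACITY_MULTIPLIER[new_policy]
    return old_usage + new_usage


# 100 GB of data moving from RAID6 to RAID1: 150 GB old + 200 GB new.
print(peak_raw_usage_gb(100, "RAID6", "RAID1"))
```

Under these assumptions, the cluster needs room for the new layout in addition to what the object already consumes, and the old components are only reclaimed after the rebuild finishes.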

For Inaccessible vSAN objects:

Try to bring the objects back to a healthy state. If they are stale, validate and delete the inaccessible virtual objects on the vSAN cluster.

Workaround:

Select Maintenance Mode 'Ensure Accessibility' option - note that all data using RAID5/6 Storage Policies will be in a reduced redundancy state until the host has exited Maintenance Mode and the data resynced back to compliance.

For insufficient space related failure:

Add additional capacity to the cluster to allow data to be rebuilt. This can be via adding additional host(s) or capacity to existing hosts.

Workaround:

Select Maintenance Mode 'Ensure Accessibility' option - note that all data with components on the host in maintenance mode will be in a reduced redundancy state until the host has exited Maintenance Mode and the data resynced back to compliance.

For vSwap related objects:

The recommendation is to power off and power on the virtual machine.

For production workloads where it may not be possible to power off the virtual machine, the policy on the swap objects can be changed using one of the commands below.

RAID 0:

/usr/lib/vmware/osfs/bin/objtool setPolicy -u <UUID> -p "((\"proportionalCapacity\" i0)(\"hostFailuresToTolerate\" i0))"

RAID 1:

/usr/lib/vmware/osfs/bin/objtool setPolicy -u <UUID> -p "((\"proportionalCapacity\" i0)(\"hostFailuresToTolerate\" i1))"

RAID 5:

/usr/lib/vmware/osfs/bin/objtool setPolicy -u <UUID> -p "((\"stripeWidth\" i1) (\"cacheReservation\" i0) (\"proportionalCapacity\" i0) (\"hostFailuresToTolerate\" i1) (\"forceProvisioning\" i0) (\"replicaPreference\" \"Capacity\") (\"iopsLimit\" i0) (\"checksumDisabled\" i0))"

RAID 6:

/usr/lib/vmware/osfs/bin/objtool setPolicy -u <UUID> -p "((\"stripeWidth\" i1) (\"cacheReservation\" i0) (\"proportionalCapacity\" i0) (\"hostFailuresToTolerate\" i2) (\"forceProvisioning\" i0) (\"replicaPreference\" \"Capacity\") (\"iopsLimit\" i0) (\"checksumDisabled\" i0))"

Additional Information
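The escaped quotes in the objtool policy string are easy to mistype by hand. The following Python sketch assembles the command text from a dictionary of settings. It only builds a string in the same form as the commands in this article and does not run anything on a host; the helper names `policy_expression` and `objtool_command` are hypothetical, and `<UUID>` remains a placeholder for the object UUID.

```python
def policy_expression(settings):
    """Render settings as a policy expression, e.g. (("hostFailuresToTolerate" i1))."""
    parts = []
    for name, value in settings.items():
        if isinstance(value, int):
            rendered = f"i{value}"      # integers are written as i<number>
        else:
            rendered = f'"{value}"'     # strings are double-quoted
        parts.append(f'("{name}" {rendered})')
    return "(" + " ".join(parts) + ")"


def objtool_command(uuid, settings):
    """Return the objtool setPolicy command line with quotes escaped for the shell."""
    escaped = policy_expression(settings).replace('"', '\\"')
    return f'/usr/lib/vmware/osfs/bin/objtool setPolicy -u {uuid} -p "{escaped}"'


# RAID 1 swap object policy, as listed in this article.
raid1 = {"proportionalCapacity": 0, "hostFailuresToTolerate": 1}
print(objtool_command("<UUID>", raid1))
```

The sketch separates the settings with a space, as in the RAID 5 and RAID 6 commands in this article. Review the generated command against the commands listed in the article before running it on a host.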
