Maintenance Mode % progress stages in vSAN nodes.

Article ID: 326563

Updated On:

Products

VMware vSAN

Issue/Introduction

This article describes the processes that take place while a vSAN node enters Maintenance Mode and gives troubleshooting guidance for each stage.

vSAN-enabled ESXi hosts can take a long time to enter Maintenance Mode, or the task can fail to progress past a specific completion percentage.

Environment

VMware vSAN 7.0.x

VMware vSAN 8.0.x

Cause

Putting a vSAN-enabled ESXi host into Maintenance Mode has a number of implications, and the processes that occur vary depending on which Maintenance Mode option is selected (e.g. Full Data Evacuation, Ensure Accessibility, or No Action).

Resolution

Summary of what occurs at each percentage:

0% - Task initializing

2% - Precheck.

19% - vMotion of VMs off the host.

20%-100% - Resync and/or migration of data onto other nodes.
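
The stage summary above can be sketched as a small lookup. The thresholds come from the list; the rule that an in-between value (for example 10%) belongs to the last threshold already passed is an assumption made for illustration, and `stage_for_progress` is a hypothetical helper name.

```python
# Thresholds taken from the stage summary. Treating in-between values as
# "the last threshold reached" is an assumption, not documented behavior.
STAGES = [
    (0, "Task initializing"),
    (2, "Precheck"),
    (19, "vMotion of VMs off the host"),
    (20, "Resync and/or migration of data onto other nodes"),
]


def stage_for_progress(percent: int) -> str:
    """Return the stage with the highest threshold not above `percent`."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    current = STAGES[0][1]
    for threshold, name in STAGES:
        if percent >= threshold:
            current = name
    return current
```

For example, `stage_for_progress(19)` returns the vMotion stage, which points to checking the remaining VMs on the host rather than vSAN resync activity.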

0% - Task initializing

- If the task stays at this percentage for a long time, this can indicate a problem with vCenter or with communication between vCenter and the hosts.

2% - Precheck.

- The task can hang at this percentage if HA/DRS settings prevent the host from entering Maintenance Mode.

19% - vMotion of VMs off the host.

- If the task is stuck at this percentage, try manually migrating the remaining VMs. The result will indicate why they cannot (or should not) migrate in their current state. Typical causes are: passthrough devices such as GPUs; an ISO in the CD/DVD device that is no longer available; no VM network available on the other hosts; VM disks stored on a local-only datastore; Affinity/Anti-Affinity rules; or insufficient compute resources on the destination hosts (not enough capacity, reservations, or capacity reserved for HA failover).

20%-100% - Resync and/or migration of data onto other nodes.

- This can take a long time depending on a) the amount of data that needs to be copied to other nodes and b) the available storage resources for component placement.

- The node's CMMDS NODE_DECOM_STATE content shows "decomState": 4 while this step is in progress.

- The data objects that still need to be moved can be determined from the list of affected objects in CMMDS ("affObjList"):

# cmmds-tool find -t NODE_DECOM_STATE -u <UUID of node> -f json
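
A minimal sketch of how the JSON output of the command above could be summarized. The exact layout of the output is not shown in this article, so the helper searches for the "decomState" and "affObjList" keys at any depth instead of assuming a fixed structure. The `SAMPLE` document, the helper names, and the assumption that "affObjList" is a list of object UUIDs are illustrative only.

```python
import json


def find_key(node, key):
    """Depth-first search for the first value stored under `key`."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def summarize_decom(raw_json: str) -> dict:
    """Extract the decommission state and the remaining affected objects."""
    data = json.loads(raw_json)
    state = find_key(data, "decomState")
    affected = find_key(data, "affObjList") or []
    return {
        "decomState": state,
        # Per this article, 4 means resync/data migration is in progress.
        "in_data_migration": state == 4,
        "remaining_objects": len(affected),
        "affObjList": affected,
    }


# Hypothetical sample; real cmmds-tool output contains more fields.
SAMPLE = """
{"entries": [{"type": "NODE_DECOM_STATE",
              "content": {"decomState": 4,
                          "affObjList": ["b0e0485c-81dc-5b68-b021-0025b501006d"]}}]}
"""

if __name__ == "__main__":
    print(summarize_decom(SAMPLE))
```

Running the command periodically and comparing `remaining_objects` between runs gives a rough indication of whether data migration is still making progress.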

Additional Information

In addition, vSAN Maintenance Mode progress can be monitored in clomd.log, located at /var/log/clomd.log. You will see messages similar to these:

2020-12-07T20:33:44.919Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: Node 00000000-0000-0000-0000-000000000000 state change. Old:DECOM_STATE_NONE New:DECOM_STATE_ACTIVE Mode:1 JobUuid:00000000-0000-0000-0000-000000000000

2020-12-07T20:33:45.446Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: Node 00000000-0000-0000-0000-000000000000 state change. Old:DECOM_STATE_ACTIVE New:DECOM_STATE_INITIALIZED Mode:1 JobUuid:00000000-0000-0000-0000-000000000000

2020-12-07T20:33:45.447Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: Node 00000000-0000-0000-0000-000000000000 state change. Old:DECOM_STATE_INITIALIZED New:DECOM_STATE_DOM_READY Mode:1 JobUuid:00000000-0000-0000-0000-000000000000

2020-12-07T20:36:16.244Z 66970 (182601487232)(opID:0)CLOM_CrawlerInit: Starting crawler in CRAWLER_PERIODIC mode

2020-12-07T20:38:16.262Z 66970 Obj b0e0485c-81dc-5b68-b021-0025b501006d has intermediate leaves or is not complete. incompleteCmmdsState: 1. nIntermediateLeafs: 0

2020-12-07T20:44:16.525Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: Node 00000000-0000-0000-0000-000000000000 state change. Old:DECOM_STATE_DOM_READY New:DECOM_STATE_PREP_COMPLETE Mode:1 JobUuid:00000000-0000-0000-0000-000000000000

2020-12-07T20:44:25.898Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: Node 00000000-0000-0000-0000-000000000000 state change. Old:DECOM_STATE_PREP_COMPLETE New:DECOM_STATE_COMPLETE Mode:1 JobUuid:00000000-0000-0000-0000-000000000000

The DECOM_STATE is marked as DECOM_STATE_INITIALIZED once the process has started, and as DECOM_STATE_COMPLETE once it has finished.
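
The state changes in clomd.log can be pulled out with a short script. This is a sketch based only on the line format of the excerpts above; the regular expression and the `decom_transitions` helper are illustrative and may need adjusting for other vSAN versions.

```python
import re

# Pattern derived from the CLOM_ProcessDecomUpdate excerpts in this article.
# Whitespace before "state change" is treated as optional.
DECOM_RE = re.compile(
    r"^(?P<ts>\S+) .*CLOM_ProcessDecomUpdate: Node (?P<node>[0-9a-f-]{36})"
    r"\s*state change\. "
    r"Old:(?P<old>DECOM_STATE_\w+) New:(?P<new>DECOM_STATE_\w+)"
)


def decom_transitions(lines):
    """Return (timestamp, old_state, new_state) for each state-change line."""
    transitions = []
    for line in lines:
        match = DECOM_RE.match(line)
        if match:
            transitions.append((match["ts"], match["old"], match["new"]))
    return transitions


# Lines copied from the excerpt above.
SAMPLE_LINES = [
    "2020-12-07T20:33:44.919Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: "
    "Node 00000000-0000-0000-0000-000000000000 state change. "
    "Old:DECOM_STATE_NONE New:DECOM_STATE_ACTIVE Mode:1 "
    "JobUuid:00000000-0000-0000-0000-000000000000",
    "2020-12-07T20:36:16.244Z 66970 (182601487232)(opID:0)CLOM_CrawlerInit: "
    "Starting crawler in CRAWLER_PERIODIC mode",
    "2020-12-07T20:44:16.525Z 66970 (182601487232)(opID:0)CLOM_ProcessDecomUpdate: "
    "Node 00000000-0000-0000-0000-000000000000 state change. "
    "Old:DECOM_STATE_DOM_READY New:DECOM_STATE_PREP_COMPLETE Mode:1 "
    "JobUuid:00000000-0000-0000-0000-000000000000",
]

if __name__ == "__main__":
    for ts, old, new in decom_transitions(SAMPLE_LINES):
        print(ts, old, "->", new)
```

If the last transition found is not DECOM_STATE_COMPLETE, the state it stopped at indicates how far the decommission got.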

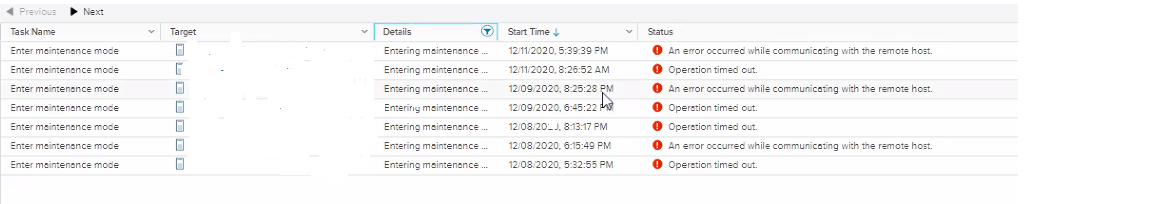

It is also possible for the progress to reach 68% and then time out due to a lack of communication with the hosts. Check the Tasks/Events of the host for the below messages:

If you see this, check the hostd, vobd, and/or vmkernel logs for any messages related to Maintenance Mode around the timestamps noted in vCenter. If there are no such messages at that time, the request from vCenter was not received by the host. Try putting the host into Maintenance Mode via the host UI to confirm that the issue lies within vCenter.
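
The log check described above can be sketched as a time-window filter. It assumes that each log line starts with a UTC timestamp in the same form as the clomd.log excerpts (for example 2020-12-07T20:33:44.919Z) and that relevant lines contain the word "maintenance". Both are assumptions, as are the `lines_near` helper and its default window of five minutes.

```python
from datetime import datetime, timedelta


def lines_near(lines, when, window_minutes=5, keyword="maintenance"):
    """Return lines containing `keyword` that fall within the time window.

    `when` is the (UTC) time of the task as noted in vCenter.
    Lines without a parseable leading timestamp are skipped.
    """
    window = timedelta(minutes=window_minutes)
    hits = []
    for line in lines:
        stamp = line.split(" ", 1)[0]
        try:
            ts = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%S.%fZ")
        except ValueError:
            continue
        if abs(ts - when) <= window and keyword in line.lower():
            hits.append(line.rstrip("\n"))
    return hits


# Usage sketch against copies of the host logs:
#
#   task_time = datetime(2020, 12, 7, 20, 33, 45)
#   for path in ("/var/log/hostd.log", "/var/log/vobd.log", "/var/log/vmkernel.log"):
#       with open(path, errors="replace") as handle:
#           for hit in lines_near(handle, task_time):
#               print(path, hit)
```

An empty result for all three logs is consistent with the request from vCenter never having reached the host.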
