VMware NSX-T Virtual Server/Pool Members DOWN when added to T0/T1 and nginx core file generated on an Edge Node.

Article ID: 322510

Updated On:

Products

Issue/Introduction

- The Load Balancer (LB) is reconfigured and an nginx core file is generated. The core files are located on the Edge Node, typically under the root directory, with names similar to the following:

total 454M

-rw-rw-rw- 1 root root 321M Jun 26 12:39 core.nginx.####.gz

-rw-rw-rw- 1 root root 321M Jun 26 12:37 core.nginx.####.gz

- Pool members may report "Connect to Peer Failure" or "TCP Handshake Timeout".

- In /var/log/syslog of the Edge Node, you see log entries for "all pool members are down":

2022-12-27T01:22:23.064913+00:00 <edge_name> NSX 6552 LOAD-BALANCER [nsx@6876 comp="nsx-edge" subcomp="lb" s2comp="lb" level="ERROR" errorCode="EDG9999999"] [########-####-####-####-##########34] Operation.Category: 'LbEvent', Operation.Type: 'StatusChange', Obj.Type: 'VirtualServer', Obj.UUID: '########-####-####-####-##########f8', Obj.Name: 'cluster:<name>', Lb.UUID: '########-####-####-####-##########34', Lb.Name: '<LB_LBname>', Status.NewStatus: 'Down', Status.Msg: 'all pool members are down'.
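On a busy Edge Node, these entries can be filtered out of the syslog by their status message. The following is a minimal sketch that assumes the log format shown in the example entry above. The function name lb_down_events is hypothetical and is not part of NSX.

```shell
# Hypothetical helper (not an NSX command): read syslog lines on stdin and
# print "<timestamp> <virtual server name> <LB UUID>" for every
# "all pool members are down" event.
# Assumes the field order of the example entry: Obj.Name followed by Lb.UUID.
lb_down_events() {
    grep -F "Status.Msg: 'all pool members are down'" |
    sed -n "s/^\([^ ]*\) .*Obj\.Name: '\([^']*\)', Lb\.UUID: '\([^']*\)'.*/\1 \2 \3/p"
}

# Usage on an Edge Node (run as root):
#   lb_down_events < /var/log/syslog
```

Lines that do not carry the status message are dropped by grep before sed runs, so unrelated LOAD-BALANCER entries produce no output.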

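- The LB CONF process for the LB instance is not running. To confirm this, follow the steps below:

1. Find the nginx process of the LB instance by filtering the process list on the LB UUID:

ps -ef | grep lb | grep nginx | grep <LB_UUID>

example: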

root@edge_name:~# ps -ef | grep lb | grep nginx | grep ########-####-####-####-##########a8

lb 9568 9481 0 Jun23 ? 00:00:00 /opt/vmware/nsx-edge/bin/nginx -u ########-####-####-####-##########a8 -g daemon off;

Note: To retrieve the LB UUID, run get load-balancer from the admin CLI of the active Edge Node. In the above example, the LB UUID is ########-####-####-####-##########a8.

2. Use the nginx process ID (9568 in the example above) in the following command to confirm that it has an LB CONF process running. If the command returns no output, no LB CONF process is running and the issue has been encountered.

ps -ef | grep <nginx process ID> | grep CONF

example:

Impacted

root@edge02:~# ps -ef | grep 9568 | grep CONF

Not impacted

root@edge02:~# ps -ef | grep 9568 | grep CONF

lb 9572 9568 0 Jun23 ? 00:00:06 nginx: LB CONF process

NOTE: The preceding log excerpts are only examples. Date, time and environmental variables may vary depending on your environment.
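The LB CONF process check described above can be combined into a single pass over the process list. The following is a minimal sketch that assumes the standard `ps -ef` column order (UID, PID, PPID, ...) and the command lines shown in the examples. The function name lb_conf_status is hypothetical and is not part of NSX.

```shell
# Hypothetical helper (not an NSX command): decide from `ps -ef` output
# (read on stdin) whether the nginx instance of a given LB UUID still has
# an "LB CONF" child process.
# Prints one of: "impacted", "not impacted", "nginx not found".
lb_conf_status() {
    awk -v uuid="$1" '
        # The nginx process of this LB instance is started with "-u <LB_UUID>".
        index($0, "nginx -u " uuid)         { master = $2 }          # PID column
        # Remember the parent PID of every LB CONF process.
        index($0, "nginx: LB CONF process") { conf_parent[$3] = 1 }  # PPID column
        END {
            if (master == "")               print "nginx not found"
            else if (master in conf_parent) print "not impacted"
            else                            print "impacted"
        }'
}

# Usage on an Edge Node (run as root):
#   ps -ef | lb_conf_status "<LB_UUID>"
```

Unlike a plain grep on the process ID, this sketch compares the PPID column exactly, so an unrelated process whose PID or arguments merely contain the same digits is not counted.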

Environment

Cause

Resolution

This issue is resolved in the following NSX versions, available at Broadcom downloads:

VMware NSX-T Data Center 3.2.3 and later.

VMware NSX 4.1.1 and later.

If you are having difficulty finding and downloading software, please review the Download Broadcom products and software KB.

Workaround:

Restart the Edge Node to fail over services to the standby node.

OR

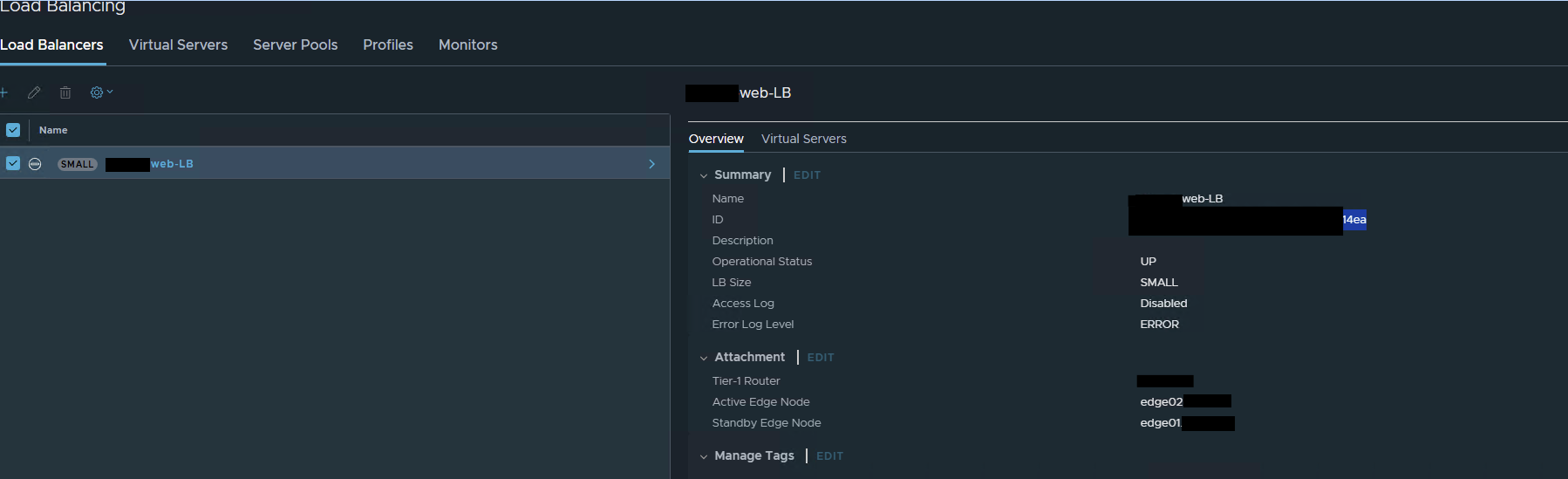

Navigate to Networking > Load Balancers (to gather LB UUID). In the below example : ID ending with 14ea is the UUID (full UUID is masked)

Restart the docker of this LB instance using the below command ran from the CLI as root on the edge node:

- #docker ps | grep <LB_UUID>

- #docker restart <CONTAINER ID>

root@edge_name:~# docker ps | grep <LB_UUID>

126fa3da65e3 nsx-edge-lb:current "/opt/vmware/edge/lb_<LB_UUID>" 2 days ago Up 2 days service_lb_<lb_uuid>

root@edge_name:~# docker restart 126fa3da65e3
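The lookup of the container ID can be scripted so that the ID does not have to be copied by hand. The following is a minimal sketch that assumes the container row mentions the LB UUID, as in the example output above. The function name lb_container_id is hypothetical and is not part of NSX or Docker.

```shell
# Hypothetical helper (not an NSX or Docker command): print the CONTAINER ID
# (first column) of every `docker ps` row, read on stdin, that mentions the
# given LB UUID.
lb_container_id() {
    [ -n "$1" ] || return 1   # refuse an empty UUID
    awk -v uuid="$1" 'index($0, uuid) { print $1 }'
}

# Usage on an Edge Node (run as root). Check that exactly one ID is printed
# before restarting it:
#   docker ps | lb_container_id "<LB_UUID>"
#   docker restart <CONTAINER ID>
```

Printing the ID first and restarting it in a separate command keeps the restart a deliberate step, which matters because restarting the wrong container interrupts another Edge service.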

Additional Information

You can confirm the Docker status by running the command below from the NSX Edge root bash shell. It lists the status of all containers, including how long the LB container has been running:

docker ps

Example:

root@edge:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9564####bd78 nsx-edge-frr:current "/opt/vmware/edge/fr…" 12 days ago Up 12 days service_frr

d102####036c nsx-edge-base:current "/bin/sleep infinity" 12 days ago Up 12 days 22/tcp plr_sr

476f####67bb nsx-edge-mdproxy:current "/opt/vmware/edge/md…" 12 days ago Up 12 days service_md_proxy

abcb####8c91 nsx-edge-base:current "/bin/sleep infinity" 12 days ago Up 12 days 22/tcp mdproxy

a681####9352 nsx-edge-dhcp:current "/opt/vmware/edge/dh…" 12 days ago Up 12 days service_dhcp

044c####82dc nsx-edge-mdproxy:current "/opt/vmware/edge/md…" 12 days ago Up 12 days service_md_agent

c957####f87b nsx-edge-dispatcher:current "/opt/vmware/edge/lb…" 12 days ago Up 12 days service_dispatcher

5451####63ef nsx-edge-datapath:current "/opt/vmware/edge/dp…" 12 days ago Up 12 days service_datapath

1071####ab35 nsx-edge-nsxa:current "/opt/vmware/edge/ns…" 12 days ago Up 12 days service_nsxa