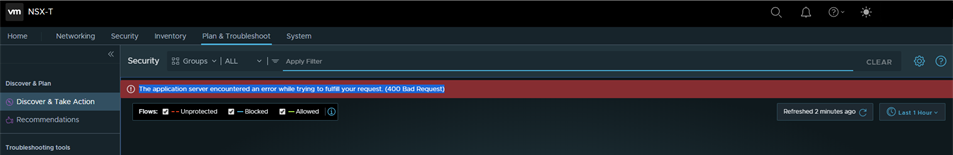

The Discover & Take Action page displays "The application server encountered an error while trying to fulfill your request. (400 Bad Request)"

Article ID: 319021

Updated On:

Products

VMware NSX

Issue/Introduction

Symptoms:

- The Discover & Take Action page displays "The application server encountered an error while trying to fulfill your request. (400 Bad Request)"

- The issue may occur with some LDAP or vIDM user accounts, but not all. The affected users are members of many groups.

- NSX-T Intelligence logs (pace-server.log) display message(s) similar to:

Note: further occurrences of HTTP request parsing errors will be logged at DEBUG level.

java.lang.IllegalArgumentException: Request header is too large
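To confirm the symptom, the log can be searched for the error string shown above. The following is a minimal sketch, not an official procedure: the function name `count_header_errors` is hypothetical, and the path to pace-server.log is left as a parameter because this article does not state where the file is located.

```shell
#!/bin/sh
# Count the log lines that report the oversized-header error.
# $1: path to pace-server.log or a copy of it (supply your own path).
count_header_errors() {
    # -c prints the number of matching lines; -F treats the pattern as a fixed string.
    grep -cF 'Request header is too large' "$1"
}

# Usage (placeholder path, replace with the real location):
#   count_header_errors /path/to/pace-server.log
```

A result of 0 does not rule out the issue, because the log itself notes that further occurrences are logged only at DEBUG level.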

Environment

VMware NSX-T

Cause

This issue is caused by an HTTP request header that is too large for the NSX-T Intelligence appliance to process. It occurs when an LDAP or vIDM user is a member of many groups.
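As a rough illustration of the size check involved, the sketch below compares the byte count of a captured set of request headers against a limit. It is an assumption-laden sketch: the function name `header_fits` is hypothetical, how the headers are captured is left to the reader, and the exact way the server counts header bytes may differ from a plain byte count.

```shell
#!/bin/sh
# Succeed if the header text read from stdin is no larger than a byte limit.
# $1: limit in bytes, for example the 65535 value used in the workaround.
header_fits() {
    # wc -c counts bytes; tr removes the padding some wc implementations add.
    size=$(wc -c | tr -d ' ')
    [ "$size" -le "$1" ]
}

# Usage (headers.txt is a hypothetical file holding captured request headers):
#   header_fits 65535 < headers.txt && echo "fits" || echo "too large"
```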

Resolution

This issue will be resolved in a later Intelligence release.

Workaround:

Workaround to manually increase the maximum HTTP header size on version 3.2 and later:

1. Edit the workload configmap:

$ kubectl --kubeconfig <kubeconfig file> edit cm workload-app -n nsxi-platform

The location of the kubeconfig file depends on where NAPP was deployed. It could be '~/.kube/config' on an NSX Manager node.

2. Add the following line under the 'server' section in the configmap:

max-http-header-size: 65535

3. Delete the current workload pod.

To identify the workload pod, run the following command. There should be one pod whose name begins with 'workload':

$ kubectl --kubeconfig <kubeconfig file> get pod -n nsxi-platform

Then delete the workload pod:

$ kubectl --kubeconfig <kubeconfig file> delete pod <workload pod name> -n nsxi-platform

After the new workload pod comes up automatically, the issue should be resolved.
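The pod lookup in step 3 can also be scripted. The sketch below filters pod names from `kubectl get pod -o name` output, which prints entries in the form `pod/<name>`. The function name `select_workload_pods` and the `KUBECONFIG_FILE` variable are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# Read `kubectl get pod -o name` output on stdin and print the names
# of pods that begin with 'workload'.
select_workload_pods() {
    # Strip the leading "pod/" prefix, then keep names starting with 'workload'.
    sed 's|^pod/||' | grep '^workload'
}

# Usage, assuming KUBECONFIG_FILE points at the kubeconfig for the NAPP cluster:
#   kubectl --kubeconfig "$KUBECONFIG_FILE" get pod -n nsxi-platform -o name | select_workload_pods
# To check that the edit from step 2 was saved in the configmap:
#   kubectl --kubeconfig "$KUBECONFIG_FILE" get cm workload-app -n nsxi-platform -o yaml | grep max-http-header-size
```

If the filter prints more than one name, or none, stop and inspect the pod list manually before deleting anything.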

Workaround on versions before 3.2:

Either reduce the number of groups that the affected users belong to, or increase the maximum HTTP header size on the NSX-T Intelligence appliance using the steps below:

1. Log in as root on the NSX-T Intelligence appliance.

2. Back up the pace configuration file:

# cp /opt/vmware/pace/config/pace-server-application.properties /opt/vmware/pace/config/pace-server-application.properties.bck

3. Edit the pace configuration file:

# vi /opt/vmware/pace/config/pace-server-application.properties

Add the following line at the end of the file:

server.max-http-header-size=65535

4. Back up the nginx configuration file:

# cp /etc/nginx/sites-enabled/ui-service.conf /tmp/ui-service.conf.bck

5. Edit the nginx configuration file:

# vi /etc/nginx/sites-enabled/ui-service.conf

Add the following line at line number 29, directly below "server_name _;":

large_client_header_buffers 4 65k;

6. Restart the related services:

# service nginx restart

# service pace-server restart

7. Check the UI to confirm that the Discover & Take Action page loads without the 400 Bad Request error.

Note: These settings persist across reboots.
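The properties edit in step 3 can be made repeatable with a small helper that appends a line only when it is missing. This is a sketch under stated assumptions, not part of the documented procedure: `ensure_line` is a hypothetical helper name, and it suits only the properties file, because the nginx directive must be placed inside the server block rather than at the end of the file.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
# $1: file path, $2: line to add.
ensure_line() {
    # -q quiet, -x whole-line match, -F fixed string; -- guards lines starting with '-'.
    if grep -qxF -- "$2" "$1"; then
        return 0
    fi
    # Add a newline first if the file does not already end with one.
    if [ -n "$(tail -c 1 "$1")" ]; then
        printf '\n' >> "$1"
    fi
    printf '%s\n' "$2" >> "$1"
}

# Usage on the appliance, after taking the backups described above:
#   ensure_line /opt/vmware/pace/config/pace-server-application.properties 'server.max-http-header-size=65535'
#   nginx -t    # validate the edited nginx configuration before restarting nginx
```

Running `nginx -t` before the restart in step 6 catches syntax errors in the edited configuration while the running service is still up.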
