Creation of the first TKC failed because the process timed out while waiting for Service Engine creation in AVI.

Article ID: 313097


Products

VMware vSphere ESXi

VMware vSphere Kubernetes Service

Issue/Introduction

Symptoms:

When WCP is freshly enabled, i.e. while the Supervisor Cluster is being created, Tanzu calls AVI to spin up two Service Engines (SEs) to host the virtual service for cluster API management (in Active/Active mode). So before any guest cluster is created, only two SEs should be providing service on the AVI side.

If a TKC (TanzuKubernetesCluster) is then created, it is not created and the operation times out, because it takes around 10-12 minutes for AVI to create a new SE and bring it up and ready.

Note: If an extra SE already exists, it is used for the new TKC and the TKC is created.


Environment

VMware vSphere 7.0 with Tanzu

Cause

It takes around 10-12 minutes for the LB to come up, but the default kubeadm timeout for the control plane to come up (timeoutForControlPlane) is only 4 minutes, as the cloud-init output shows:


# grep "up to" /var/log/cloud-init-output.log
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

This value comes from the timeoutForControlPlane field in the kubeadm-config ConfigMap:

# kubectl get -o yaml cm -n kube-system kubeadm-config | grep timeoutFor
timeoutForControlPlane: 4m0s

This output is from the Supervisor's own kubeadm cluster configuration.

The 4-minute timeout is the upstream Kubernetes (kubeadm) default, not something set directly in TKGs:

https://github.com/kubernetes/kubernetes/blob/d39c9aeff09e81b0148a7eafc456c87072376b01/cmd/kubeadm/app/constants/constants.go#L224-L225

Note:

- There is no way to change the default in vSphere 7.0 before creating TKCs. It might be possible to change the timeout after a TKC is created, but this would have to be done per TKC (via kubectl edit/patch) and would likely involve deleting any nodes whose kubeadm templates had the original default timeout applied.

- The 4m is only the timeoutForControlPlane, and that timer does not start until the VM has been provisioned, booted, and initialized with everything kubelet needs to start. Provisioning and boot times vary; in one test environment, the time from running kubectl apply -f tkc.yaml until the wait-control-plane timer started was about 5 minutes. In that example the LB would therefore need to be ready within about 9 minutes in total (5 + 4), which is shorter than the 10-12 minutes a new SE needs.

- This is a limitation of the Active/Active Service Engine configuration with Distribution when building TKGS guest clusters.
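The timing argument in the notes above can be checked with simple arithmetic. This is an illustrative sketch only: it assumes the sample figures quoted in this article (about 5 minutes from kubectl apply until the wait-control-plane timer starts, the 4m default, up to 12 minutes for a new SE) and assumes SE creation starts at roughly the same moment as kubectl apply. The function names are hypothetical.

```python
"""Rough timing check for the first TKC's control-plane load balancer.

Assumption: the numbers used are the sample values from this article;
real provisioning and SE bring-up times vary by environment.
"""


def lb_window_minutes(provision_min: float, timeout_min: float) -> float:
    """Minutes from `kubectl apply` until kubeadm stops waiting for the control plane."""
    return provision_min + timeout_min


def fits(provision_min: float, timeout_min: float, se_ready_min: float) -> bool:
    """True if the new SE (and therefore the LB) is ready before the timeout expires."""
    return se_ready_min <= lb_window_minutes(provision_min, timeout_min)


if __name__ == "__main__":
    for timeout in (4, 25):
        window = lb_window_minutes(5, timeout)
        # Compare against the upper end of the 10-12 minute SE range.
        print(f"timeout={timeout}m window={window}m fits={fits(5, timeout, 12)}")
```

With the 4m default the window is about 9 minutes, so even a 10-minute SE bring-up does not fit; with the 25m value used in the ClusterClass procedure below, it does.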

Resolution

vSphere 8.0 includes ClusterClasses, which can change the kubeadm config, including timeoutForControlPlane. To avoid this issue in vSphere 8.0, use a ClusterClass directly instead of a TKC spec.


ClusterClass is an upstream/OSS component of Cluster API (CAPI) core. It works with CAPV as well as the other providers (AWS, Azure, etc.). Its design was originally based on TKCs, and it was implemented by engineers from VMware and other vendors through the standard CAPI/Kubernetes design and implementation processes.

VMware has rebuilt TKGs/TKC on top of the ClusterClass model in vSphere 8.0. In future versions of vSphere/TKGs we plan to migrate customers to using ClusterClasses directly and deprecate the use of TKC specs.

Note: Without the mentioned ClusterClass changes, the default timeoutForControlPlane in vSphere 8.0 is still 4m.

----------------------------------------------------------------------------------------

More details on the procedure to use ClusterClass in vSphere 8.0:

The default ClusterClass used by TKCs is currently immutable in a live environment, because each subsequent vSphere release may update the default ClusterClass and overwrite any changes. The team has been discussing how to support versioning so that customers can modify the default CC.

A custom ClusterClass is mutable, because vCenter releases do not modify it, so a custom CC can be used to change the timeout.

First, clone the default CC:

# kubectl get -o yaml clusterclass tanzukubernetescluster > custom-cc.yaml

Edit custom-cc.yaml: change the name from "tanzukubernetescluster" to "custom-cc", and add the following 3 lines (the "- op: add" entry under jsonPatches in the existing tkrConfiguration patch) to change the timeout to 25 minutes:

    - name: tkrConfiguration
      enabledIf: "{{ if .TKR_DATA }}true{{end}}"
      definitions:
      - selector:
          apiVersion: controlplane.cluster.x-k8s.io/v1beta1
          kind: KubeadmControlPlaneTemplate
          matchResources:
            controlPlane: true
        jsonPatches:
        - op: add
          path: /spec/template/spec/kubeadmConfigSpec/clusterConfiguration/apiServer/timeoutForControlPlane
          value: 25m # default is 4m

Create the new CC:

# kubectl apply -f custom-cc.yaml
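The clone-and-edit step can also be scripted instead of editing YAML by hand. The minimal sketch below assumes the default ClusterClass was exported as JSON (kubectl get -o json clusterclass tanzukubernetescluster > default-cc.json) and contains the tkrConfiguration patch shown above; kubectl apply accepts JSON as well as YAML. The script name make_custom_cc.py and the file names are hypothetical, and stripping server-populated metadata such as uid and resourceVersion is an extra precaution, not one of the article's steps.

```python
"""Sketch: derive a custom ClusterClass from the exported default one.

Assumptions (hypothetical, not from the article): the default CC was saved
with `kubectl get -o json clusterclass tanzukubernetescluster > default-cc.json`,
and this file is saved as make_custom_cc.py.
"""
import json
import sys

# The jsonPatches entry from the article: raise the kubeadm timeout to 25m.
TIMEOUT_PATCH = {
    "op": "add",
    "path": ("/spec/template/spec/kubeadmConfigSpec/clusterConfiguration"
             "/apiServer/timeoutForControlPlane"),
    "value": "25m",  # kubeadm default is 4m
}

# Server-populated metadata, dropped so the copy is created as a new object
# (an extra precaution, not one of the article's steps).
SERVER_FIELDS = ("uid", "resourceVersion", "creationTimestamp",
                 "generation", "managedFields")


def make_custom_cc(default_cc: dict, new_name: str = "custom-cc") -> dict:
    """Return a renamed copy of default_cc with the timeout patch appended."""
    cc = json.loads(json.dumps(default_cc))  # deep copy; the input is untouched
    cc["metadata"]["name"] = new_name
    for field in SERVER_FIELDS:
        cc["metadata"].pop(field, None)
    cc.pop("status", None)

    # Find the tkrConfiguration definition that targets the control-plane
    # KubeadmControlPlaneTemplate, as shown in the article.
    for patch in cc["spec"].get("patches", []):
        if patch.get("name") != "tkrConfiguration":
            continue
        for definition in patch.get("definitions", []):
            selector = definition.get("selector", {})
            if (selector.get("kind") == "KubeadmControlPlaneTemplate"
                    and selector.get("matchResources", {}).get("controlPlane")):
                definition.setdefault("jsonPatches", []).append(dict(TIMEOUT_PATCH))
                return cc
    raise ValueError("no tkrConfiguration control-plane definition found")


if __name__ == "__main__" and len(sys.argv) == 3:
    with open(sys.argv[1]) as src:
        custom = make_custom_cc(json.load(src))
    with open(sys.argv[2], "w") as dst:
        json.dump(custom, dst, indent=2)
```

Usage, under the same assumptions: python3 make_custom_cc.py default-cc.json custom-cc.json, then kubectl apply -f custom-cc.json. Raising an error when the definition is missing avoids silently creating a CC that still uses the 4m default.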

Clusters created with the custom CC use a cluster.x-k8s.io/Cluster spec rather than a run.tanzu.vmware.com/TanzuKubernetesCluster spec. The structure is different, but the values are the same as those in a TKC spec (TKr version, number of control plane/worker nodes, storage class, VM class, etc.).

The default timeoutForControlPlane value for all CP/worker nodes created by this CC will then be 25m.
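For illustration, a Cluster spec that uses the custom CC might resemble the one produced by this sketch, which prints a cluster.x-k8s.io/v1beta1 Cluster as JSON for use with kubectl apply -f. It is a sketch under assumptions: the variable names vmClass and storageClass, the worker class node-pool, and all values are hypothetical placeholders modelled on TKC fields; fields such as clusterNetwork are omitted; and the Cluster is assumed to be created in the same vSphere Namespace where custom-cc was applied, since Cluster API resolves topology.class in the Cluster's own namespace by default.

```python
"""Sketch: build a cluster.x-k8s.io/v1beta1 Cluster that references custom-cc.

Assumption: variable names, the worker class, and all values are hypothetical
placeholders; check them against the variables your ClusterClass defines.
"""
import json


def cluster_manifest(name, namespace, tkr_version, cp_replicas,
                     worker_replicas, vm_class, storage_class,
                     cluster_class="custom-cc"):
    """Return a Cluster manifest (as a dict) using a ClusterClass topology."""
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "topology": {
                "class": cluster_class,      # the custom ClusterClass
                "version": tkr_version,      # TKr version, as in a TKC spec
                "controlPlane": {"replicas": cp_replicas},
                "workers": {"machineDeployments": [{
                    "class": "node-pool",    # assumed worker class name
                    "name": "node-pool-1",   # hypothetical pool name
                    "replicas": worker_replicas,
                }]},
                "variables": [               # assumed variable names
                    {"name": "vmClass", "value": vm_class},
                    {"name": "storageClass", "value": storage_class},
                ],
            }
        },
    }


if __name__ == "__main__":
    manifest = cluster_manifest("tkc-01", "demo-ns", "<tkr-version>", 3, 3,
                                "<vm-class>", "<storage-class>")
    print(json.dumps(manifest, indent=2))
```

The values map one-to-one onto the TKC fields listed above; only their location in the spec changes.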

Workaround:

Create an extra SE before creating the first TKC. The TKC can then use that SE instead of waiting for a new one, so creation should not time out.

Additional Information

Impact/Risks:

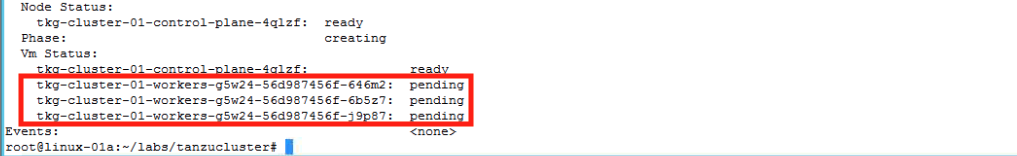

The first TKC will not be created if there is no extra SE. It will be stuck in the "creating" phase, and its nodes will be stuck in the "pending" state.
