Creating a TKGI cluster fails with Invalid CPI response - SchemaValidationError

Article ID: 298720

Products

VMware Tanzu Kubernetes Grid Integrated Edition

Issue/Introduction

This issue was reported while debugging an environment with the following versions:

- Ops Manager - v2.10.15

- TKGI - v1.9.5

- vSphere CPI - v60

- vSphere stemcell - v621.131

- Networking - vSphere Networking

Creating a TKGI cluster or running a smoke-test errand fails with the following error:

Task 381 | 19:06:02 | L executing post-stop: master/<ID> (1) (00:00:22)
L Error: Invalid CPI response - SchemaValidationError: Expected instance of Hash, given instance of NilClass

The BOSH task debug logs show that the operation failed when the CPI invoked a method such as attach_disk, create_disk, or set_vm_metadata. For example:

D, [2021-06-22T19:06:10.988693 #6144] [instance_update(master/<ID> (1))] DEBUG -- DirectorJobRunner: [external-cpi] [cpi-792807] request: {"method":"attach_disk","arguments":["vm-<ID>","disk-<ID>"],"context":{"director_uuid":"<ID>","request_id":"cpi-<ID>","vm":{"stemcell":{"api_version":3}},"datacenters":"<redacted>","default_disk_type":"<redacted>","host":"<redacted>","password":"<redacted>","user":"<redacted>"},"api_version":1} with command: /var/vcap/jobs/vsphere_cpi/bin/cpi

D, [2021-06-22T19:06:11.499431 #6144] [instance_update(master/<ID> (1))] DEBUG -- DirectorJobRunner: [external-cpi] [cpi-792807] response: , err: , exit_status: pid 18445 exit 245

<logs omitted for brevity>

E, [2021-06-22T19:06:11.506138 #6144] [instance_update(master/<ID> (1))] ERROR -- DirectorJobRunner: Error updating instance: #<Bosh::Clouds::ExternalCpi::InvalidResponse: Invalid CPI response - SchemaValidationError: Expected instance of Hash, given instance of NilClass>

/var/vcap/data/packages/director/9ab6cf0d054129da2585c3d01c752015589a85c7/gem_home/ruby/2.6.0/gems/bosh-director-0.0.0/lib/cloud/external_cpi.rb:240:in `rescue in validate_response'

/var/vcap/data/packages/director/9ab6cf0d054129da2585c3d01c752015589a85c7/gem_home/ruby/2.6.0/gems/bosh-director-0.0.0/lib/cloud/external_cpi.rb:237:in `validate_response'

/var/vcap/data/packages/director/9ab6cf0d054129da2585c3d01c752015589a85c7/gem_home/ruby/2.6.0/gems/bosh-director-0.0.0/lib/cloud/external_cpi.rb:103:in `invoke_cpi_method'

/var/vcap/data/packages/director/9ab6cf0d054129da2585c3d01c752015589a85c7/gem_home/ruby/2.6.0/gems/bosh-director-0.0.0/lib/cloud/external_cpi.rb:67:in `attach_disk'

Note the CPI method (attach_disk, create_disk, or set_vm_metadata) and the PID referenced in the logs; both will help in resolving this issue.
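The CPI method and PID can also be pulled out of a saved copy of the task debug log with a short script. The following is a minimal sketch, not an official tool: the function name find_failed_cpi_calls and the regular expressions are illustrative assumptions based only on the log lines shown above, and a process terminated by a signal may log its exit status in a different form that this sketch does not match.

```python
import re

# Patterns derived from the sample debug lines in this article (assumed format).
REQUEST_RE = re.compile(
    r'\[external-cpi\] \[(cpi-\d+)\] request: \{"method":"(\w+)"'
)
RESPONSE_RE = re.compile(
    r'\[(\d{4}-\d{2}-\d{2}T[\d:.]+) #\d+\].*'
    r'\[external-cpi\] \[(cpi-\d+)\] response: .*'
    r'exit_status: pid (\d+) exit (\d+)'
)


def find_failed_cpi_calls(lines):
    """Return one dict per CPI call whose process exited with a non-zero code."""
    methods = {}   # request id -> CPI method name seen in the request line
    failures = []
    for line in lines:
        request = REQUEST_RE.search(line)
        if request:
            methods[request.group(1)] = request.group(2)
            continue
        response = RESPONSE_RE.search(line)
        if response and response.group(4) != "0":
            timestamp, request_id, pid, exit_code = response.groups()
            failures.append({
                "timestamp": timestamp,
                "request_id": request_id,
                "method": methods.get(request_id, "unknown"),
                "pid": int(pid),
                "exit_code": int(exit_code),
            })
    return failures
```

Passing the lines of the debug log (for example, from an open file object) returns the failed calls in the order they appear. For the excerpt above, the result would contain the attach_disk call with PID 18445 and exit code 245.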

Environment

Product Version: 1.9

OS: Linux

Resolution

Note: There is no single solution to this problem. The following resolution and debugging steps will help you identify the specific cause in your environment.

The artifacts shared in this article show that a process with PID 18445 exited with exit code 245. This PID belongs to the vsphere_cpi process that was executing CPI operations during cluster creation or the smoke-tests errand, so the next step is to find out why that process exited. Unfortunately, the CPI logs do not show whether an external entity caused vsphere_cpi to exit and the whole operation to fail. However, you can still inspect the system logs on the BOSH Director VM, where the CPI component runs.

While debugging one such scenario, we used the following steps and found that an external security agent installed on the BOSH Director VM was injecting into the vsphere_cpi process during its execution. This caused the CPI process to crash.

- On the BOSH Director VM, run htop to monitor the processes running on the VM.

- Try executing a cluster creation or running the smoke-tests errand to see how the vsphere CPI process behaves around that time.

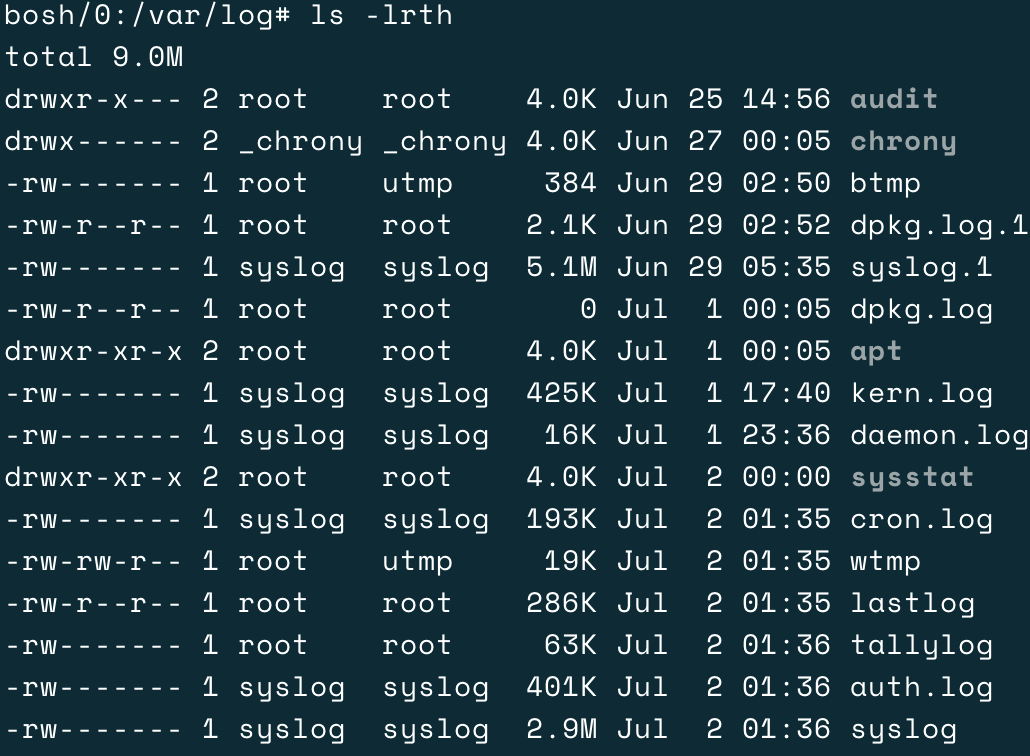

- Check the /var/log directory to see if you can spot a sub-directory that's not typically present for a vanilla TKGI installation. For example, here is what sub-directories look like on a vanilla bosh director VM (no addons or additional software installed):

- In one such scenario, the BOSH Director VM was running extra daemons (namely pmd and dypd) from the Palo Alto Networks Cortex XDR security agent, which logged information under the /var/log/traps directory.

- Matching the timeline of the failure against the logs from the extra daemons, we spotted activity targeting the vsphere_cpi PID in /var/log/traps/dypd.log. For example:

<Info> af356e5f-42ef-4c10-57dc-a8cdc84e1a83 [6647:17220 ] {dypd:DYPD:Inject:18445-ruby:Initialize:} Executable changed, canceling injection

<Info> af356e5f-42ef-4c10-57dc-a8cdc84e1a83 [18447:18447 ] {pmd:AppArmor} Checking for 18445

<Info> af356e5f-42ef-4c10-57dc-a8cdc84e1a83 [6647:18139 ] {dypd:DYPD:Inject:18445-ruby:} Injecting

<Warning> af356e5f-42ef-4c10-57dc-a8cdc84e1a83 [6647:18139 ] {dypd:DYPD:Inject:18445-ruby:Initialize:} Can't inject before process started properly

<Info> af356e5f-42ef-4c10-57dc-a8cdc84e1a83 [18453:18453 ] {pmd:AppArmor} Checking for 18445

- To confirm that these extra daemons were causing the vsphere_cpi process to crash, we temporarily disabled them using the agent vendor's instructions. Subsequent cluster creations and smoke-tests errand runs then completed without any issues.
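The correlation step above (matching the failed vsphere_cpi PID against the agent's log) can be sketched in a few lines of Python. This is an illustration under stated assumptions: the function name agent_activity_for_pid is hypothetical, and the entry layout (level, ID, [pid:tid], {tag}, message) is inferred solely from the dypd.log excerpt in this article, so other agents or versions may log differently.

```python
import re

# Entry layout inferred from the dypd.log excerpt above (an assumption):
#   <Level> <id> [pid:tid ] {tag} message
ENTRY_RE = re.compile(
    r'<(?P<level>\w+)>\s+\S+\s+\[(?P<pid>\d+):(?P<tid>\d+)\s*\]\s+'
    r'\{(?P<tag>[^}]*)\}\s*(?P<message>.*)$'
)


def agent_activity_for_pid(lines, target_pid):
    """Return parsed agent log entries whose tag or message mentions target_pid."""
    # Lookarounds keep 18445 from matching inside a longer number such as 184450.
    pid_re = re.compile(rf'(?<!\d){int(target_pid)}(?!\d)')
    hits = []
    for line in lines:
        entry = ENTRY_RE.search(line.strip())
        if not entry:
            continue  # skip lines that do not follow the assumed layout
        tag, message = entry.group("tag"), entry.group("message")
        if pid_re.search(tag) or pid_re.search(message):
            hits.append({
                "level": entry.group("level"),
                "agent_pid": int(entry.group("pid")),
                "tag": tag,
                "message": message,
            })
    return hits
```

Calling the function with the lines of /var/log/traps/dypd.log and the PID taken from the BOSH debug log (18445 in this article) returns only the entries that reference that process. Entries whose tag contains Inject are the ones to compare against the failure timestamp.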
