NSX-T pool members are removed and not added back when router, diego_brain, or mysql_proxy VMs are recreated in Tanzu Application Service in vSphere with NSX-T networking

Article ID: 298469

Products

VMware Tanzu Application Service for VMs

Issue/Introduction

This issue applies to the following environment:

- NSX-T Version - v3.1.0

- Ops Manager - v2.10.2

- Tanzu Application Service (TAS) - v2.10.8

Running Apply Changes for TAS with the Recreate all BOSH-deployed VMs option checked failed to update the mysql_monitor VM with the following error:

```
Task 4752 | 03:42:33 | Error: 'mysql_monitor/24d4c90c-0db8-4bbe-8049-182a797e13c0 (0)' is not running after update. Review logs for failed jobs: replication-canary
```

The replication-canary job log (`/var/vcap/sys/log/replication-canary/replication-canary.stdout.log`) contains events reporting 502 Bad Gateway errors, for example:

```
{"timestamp":"2021-01-22T00:06:51.905981397Z","level":"info","source":"/var/vcap/packages/replication-canary/bin/replication-canary","message":"/var/vcap/packages/replication-canary/bin/replication-canary.uaa-client.fetch-token-from-uaa-start","data":{"endpoint":{"Scheme":"https","Opaque":"","User":null,"Host":"uaa.<system-domain>","Path":"/oauth/token","RawPath":"","ForceQuery":false,"RawQuery":"","Fragment":""},"session":"3"}}
{"timestamp":"2021-01-22T00:06:51.927936629Z","level":"info","source":"/var/vcap/packages/replication-canary/bin/replication-canary","message":"/var/vcap/packages/replication-canary/bin/replication-canary.uaa-client.fetch-token-from-uaa-end","data":{"session":"3","status-code":502}}
{"timestamp":"2021-01-22T00:06:51.928001465Z","level":"error","source":"/var/vcap/packages/replication-canary/bin/replication-canary","message":"/var/vcap/packages/replication-canary/bin/replication-canary.uaa-client.error-fetching-token","data":{"error":"status code: 502, body: \u003chtml\u003e\r\n\u003chead\u003e\u003ctitle\u003e502 Bad Gateway\u003c/title\u003e\u003c/head\u003e\r\n\u003cbody bgcolor=\"white\"\u003e\r\n\u003ccenter\u003e\u003ch1\u003e502 Bad Gateway\u003c/h1\u003e\u003c/center\u003e\r\n\u003chr\u003e\u003ccenter\u003eNSX LB\u003c/center\u003e\r\n\u003c/body\u003e\r\n\u003c/html\u003e\r\n","session":"2"}}
{"timestamp":"2021-01-22T00:06:51.928042157Z","level":"fatal","source":"/var/vcap/packages/replication-canary/bin/replication-canary","message":"/var/vcap/packages/replication-canary/bin/replication-canary.Failed to register UAA client","data":{"error":"status code: 502, body: \u003chtml\u003e\r\n\u003chead\u003e\u003ctitle\u003e502 Bad Gateway\u003c/title\u003e\u003c/head\u003e\r\n\u003cbody bgcolor=\"white\"\u003e\r\n\u003ccenter\u003e\u003ch1\u003e502 Bad Gateway\u003c/h1\u003e\u003c/center\u003e\r\n\u003chr\u003e\u003ccenter\u003eNSX LB\u003c/center\u003e\r\n\u003c/body\u003e\r\n\u003c/html\u003e\r\n","trace":"goroutine 1 [running]:\ncode.cloudfoundry.org/lager.(*logger).Fatal(0xc00008c3c0, 0x80b697, 0x1d, 0x883580, 0xc00001e390, 0x0, 0x0, 0x0)\n\t/var/vcap/data/compile/replication-canary/replication-canary/vendor/code.cloudfoundry.org/lager/logger.go:138 +0xc6\nmain.main()\n\t/var/vcap/data/compile/replication-canary/replication-canary/main.go:99 +0x1698\n"}}
```
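When the log is long, the failed token requests can be pulled out programmatically. The following Python sketch assumes the log holds one lager-style JSON object per line, as in the excerpt above; the function names `failed_uaa_events` and `scan_log` are hypothetical. It flags events that carry an HTTP `status-code` of 502 or have level `error` or `fatal`, and silently skips lines that are not JSON.

```python
import json
from typing import Iterable, Iterator

# Levels treated as failures (an assumption for this sketch).
FAILURE_LEVELS = {"error", "fatal"}


def failed_uaa_events(lines: Iterable[str]) -> Iterator[dict]:
    """Yield log events that report a 502 status code or a failure level.

    Assumes one lager-style JSON object per line; other lines are skipped.
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # not a JSON log line
        if not isinstance(event, dict):
            continue
        data = event.get("data")
        if not isinstance(data, dict):
            data = {}
        if data.get("status-code") == 502 or event.get("level") in FAILURE_LEVELS:
            yield event


def scan_log(path: str) -> None:
    """Print timestamp, level and message for each matching event in `path`."""
    with open(path, encoding="utf-8") as log:
        for event in failed_uaa_events(log):
            print(event.get("timestamp"), event.get("level"), event.get("message"))
```

Run against a copy of `replication-canary.stdout.log` (for example, one fetched with `bosh logs`), `scan_log` would report the three events from the excerpt whose messages end in `fetch-token-from-uaa-end`, `error-fetching-token`, and `Failed to register UAA client`, and skip the initial `fetch-token-from-uaa-start` event.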

With the Unicode escapes decoded, the body of the error message above reads:

```html
<html> <head><title>502 Bad Gateway</title></head> <body bgcolor="white"> <center><h1>502 Bad Gateway</h1></center> <hr><center>NSX LB</center> </body> </html>
```
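The decoded form above can be reproduced from a raw log line with a few lines of Python: `json.loads` turns the `\u003c` and `\u003e` escapes back into `<` and `>`, and collapsing whitespace folds the `\r\n` line breaks into single spaces. The function name `readable_error_body` is hypothetical, and the sketch assumes the `data.error` field has the `status code: NNN, body: ...` shape seen in this log; any other error text is returned whole.

```python
import json
import re


def readable_error_body(log_line: str) -> str:
    """Return the body embedded in a replication-canary error event,
    with JSON escapes decoded and whitespace runs collapsed to one space."""
    event = json.loads(log_line)        # decodes \u003c -> "<", \r\n -> CR LF, \" -> "
    error = event["data"]["error"]      # e.g. "status code: 502, body: <html>..."
    _, sep, body = error.partition("body: ")
    text = body if sep else error       # fall back to the whole error text
    return re.sub(r"\s+", " ", text).strip()
```

Passing the `error-fetching-token` line from the excerpt to this function should yield the single-line HTML shown above, ending in `<center>NSX LB</center>`, which identifies the NSX-T load balancer as the component that returned the 502.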

The error also shows that UAA was not reachable from mysql_monitor. On further inspection, the NSX-T virtual server in front of the foundation has no pool members in the router server pool. The request therefore reached the load balancer, but the load balancer had no router to forward it to, and only the router instances know the route to UAA and its IP address.

The problem occurred when the router VMs were recreated: their pool members were deleted from NSX-T but were not added back after the new router instances were created. The same behavior occurs when diego_brain or mysql_proxy VMs are recreated, if those instance groups are also backed by NSX-T server pools.

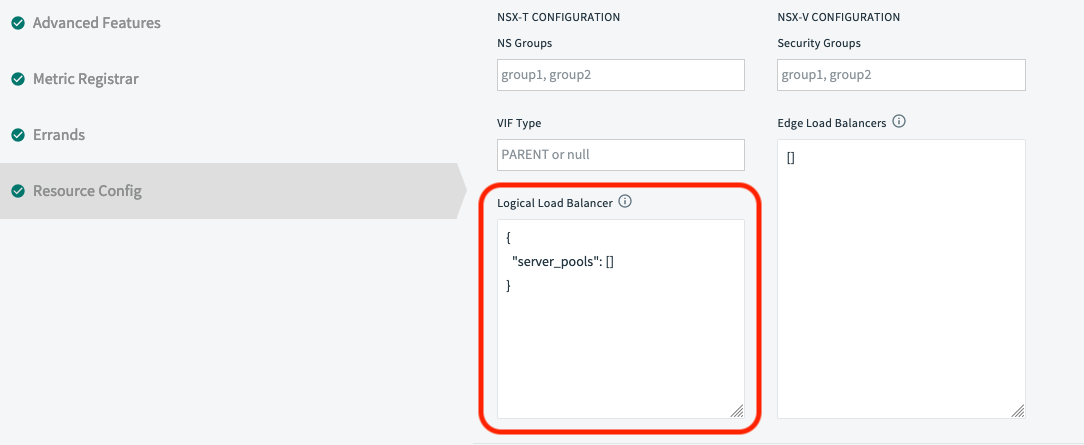
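To confirm the diagnosis for a specific pool, the IPs of the VMs that should be in it can be compared with its current members. The sketch below is an illustration, not an NSX-T client: it assumes you already have the pool's JSON representation (for example, as returned by the NSX-T Manager load balancer pool API) and that it contains a `members` list whose entries carry `ip_address` and, optionally, `port`. The expected IPs could come from `bosh -d <deployment> instances`; the function name, pool name, and IP addresses are hypothetical.

```python
from typing import Iterable, Set


def missing_pool_members(pool: dict, expected_ips: Iterable[str], port: int) -> Set[str]:
    """Return the expected IPs that are not members of `pool` on `port`.

    Assumes `pool` has a "members" list of dicts with "ip_address" and an
    optional "port". The port may be encoded as a string, so compare as text.
    A member without a port is treated as matching any port.
    """
    present = {
        member.get("ip_address")
        for member in pool.get("members") or []
        if str(member.get("port", port)) == str(port)
    }
    return set(expected_ips) - present


# Hypothetical router IPs and an empty pool, as in the failure described above.
routers = ["10.0.4.21", "10.0.4.22"]
empty_pool = {"display_name": "router-pool-https", "members": []}
print(sorted(missing_pool_members(empty_pool, routers, 443)))  # prints ['10.0.4.21', '10.0.4.22']
```

An empty result means every expected VM is registered in the pool on that port; a non-empty result after a recreate matches the symptom in this article.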

Comparing the configuration with the documentation on configuring an NSX-T load balancer for TAS, including the changes required in the Resource Config section of the TAS tile, shows that the JSON payload that adds the server pool configuration in the Resource Config section of the TAS tile is missing.

- Docs links:

- The following screenshot from the router instance group shows what a misconfigured instance group will look like in the TAS tile:

Environment

Product Version: 2.10

OS: Linux

Resolution

In your NSX-T environment, identify the server pools that are affected and determine the following two details for each one:

- Server pool name

- Port number

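Add the following JSON to the affected instance group (for example, router) in the Resource Config section of the TAS tile, replacing the server pool name and port with the values gathered above:

```json
{
  "server_pools": [
    {
      "name": "<server-pool-name>",
      "port": 443
    }
  ]
}
```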
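Because the payload only combines the two details gathered above, it can also be generated rather than typed by hand, which avoids JSON syntax mistakes. This Python sketch (the function name `server_pool_payload` and the pool name are hypothetical) builds the `server_pools` list from (name, port) pairs and rejects an empty name or an out-of-range port. Since `server_pools` is a list, it presumably accepts more than one entry if an instance group backs several pools.

```python
import json
from typing import List, Tuple


def server_pool_payload(pools: List[Tuple[str, int]]) -> str:
    """Return the Resource Config JSON that attaches an instance group to NSX-T server pools."""
    entries = []
    for name, port in pools:
        if not name:
            raise ValueError("server pool name must not be empty")
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port {port} for server pool {name!r}")
        entries.append({"name": name, "port": port})
    return json.dumps({"server_pools": entries}, indent=2)


# "router-pool-https" is a hypothetical pool name; use the name shown in NSX-T.
print(server_pool_payload([("router-pool-https", 443)]))
```

The printed JSON has the same structure as the payload above and can be pasted into the instance group's configuration.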

After you save the changes, the next Apply Changes shows the following change when Ops Manager displays the manifest difference:

```
vm_extensions:
+ - cloud_properties:
+     nsxt:
+       lb:
+         server_pools:
+         - name: <server-pool-name>
+           port: 443
+   name: vm-extension-router-<ID>
instance_groups:
- name: router
+   vm_extensions:
+   - vm-extension-router-<ID>
```
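The same check can be applied to the manifest itself, for example when reviewing the diff for the diego_brain or mysql_proxy instance groups. The sketch below assumes the `vm_extensions` and `instance_groups` sections shown in the diff have already been parsed into one Python dict (for example, with a YAML library); the function names and the extension and pool names are hypothetical. An empty result means no NSX-T server pool is attached to that instance group, which is the misconfiguration described in this article.

```python
from typing import Any, Dict, List


def _dig(value: Any, *keys: str) -> Any:
    """Follow nested dict keys, returning None if any level is missing."""
    for key in keys:
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def server_pools_for(manifest: Dict[str, Any], instance_group: str) -> List[Dict[str, Any]]:
    """Return the NSX-T server pools attached to `instance_group` via its VM extensions."""
    extensions = {ext.get("name"): ext for ext in manifest.get("vm_extensions") or []}
    pools: List[Dict[str, Any]] = []
    for group in manifest.get("instance_groups") or []:
        if group.get("name") != instance_group:
            continue
        for ext_name in group.get("vm_extensions") or []:
            lb_pools = _dig(extensions.get(ext_name), "cloud_properties", "nsxt", "lb", "server_pools")
            pools.extend(lb_pools or [])
    return pools


# Hypothetical parsed manifest mirroring the diff: router has a pool, diego_brain has none.
manifest = {
    "vm_extensions": [{
        "name": "vm-extension-router-example",
        "cloud_properties": {"nsxt": {"lb": {"server_pools": [{"name": "router-pool-https", "port": 443}]}}},
    }],
    "instance_groups": [
        {"name": "router", "vm_extensions": ["vm-extension-router-example"]},
        {"name": "diego_brain"},
    ],
}
print(server_pools_for(manifest, "router"))
print(server_pools_for(manifest, "diego_brain"))  # prints [] (no server pool attached)
```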

To confirm that the pool members are added back automatically, check the server pool in the NSX-T Manager UI after the recreated VM (router, diego_brain, or mysql_proxy) has been updated successfully.